For the record, my MidJourney profile is here. But it may not do you much good, since the site is, tiresomely, set up to drop you back to the homepage unless you’re a logged-in user. OpenAI’s DALL-E 2 demo does this as well, and doesn’t even have a user profile page to drop you back from. Stable Diffusion is more approachable, letting anyone just rock up and play, but also has no user profile, so you can’t see my SD noodling either. Not that there’s much there anyway, since MidJourney is easily the best of the three, at least to my eyes, and the one I’ve most felt like poking at.

If you’ve managed to avoid all knowledge of these beasts, well, first of all: congratulations. You could just stop reading now and preserve your virginity for a little while longer. But where’s the fun in that?

All three are deep-learning-based—I refuse to say AI—image generators, which yoke together a language model that interprets the text prompt with (as far as I can tell) a diffusion model that iteratively & probabilistically “reconstructs” an image that might correspond to that prompt, starting from a “degraded” image consisting entirely of noise. These things are trained on an absolute fuckton of images and text — very roughly speaking, all the art ever, pace systematic selection biases — and they function as sophisticated plagiarism engines, constructing delicate collages of everything that’s gone before.

Heck, who doesn’t?
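For the curious, here’s a toy sketch of that denoise-from-noise loop, shrunk from an image down to a single number. It is emphatically not how MidJourney, DALL-E 2 or Stable Diffusion are actually implemented: there’s no text prompt and no neural network. The “training data” is assumed to be a 1-D Gaussian, which lets a closed-form formula stand in for the learned denoiser, and the step rule is a deterministic DDIM-style update, only one of several sampling schemes in use. The names (`DATA_MEAN`, `alpha_bar`, `denoise`, `sample`) and the cosine noise schedule are my own illustrative assumptions.

```python
import math
import random
import statistics

# Hypothetical toy "training data": a single 1-D Gaussian.
# Real models learn a denoiser from vast numbers of images; because this
# toy data is Gaussian, the best possible denoiser has a closed form.
DATA_MEAN = 3.0
DATA_STD = 0.5


def alpha_bar(t: float) -> float:
    """Signal fraction at noise level t: 1.0 at t=0 (clean), ~0.0 at t=1 (pure noise).

    Assumed cosine schedule, chosen for illustration only.
    """
    return math.cos(0.5 * math.pi * t) ** 2


def denoise(x_t: float, a: float) -> float:
    """Expected clean value x0 given x_t = sqrt(a)*x0 + sqrt(1 - a)*noise."""
    var_t = a * DATA_STD ** 2 + (1.0 - a)        # variance of x_t
    gain = math.sqrt(a) * DATA_STD ** 2 / var_t  # how far to trust x_t
    return DATA_MEAN + gain * (x_t - math.sqrt(a) * DATA_MEAN)


def sample(rng: random.Random, steps: int = 50) -> float:
    """Start from pure noise and iteratively 'reconstruct' a data-like value."""
    x = rng.gauss(0.0, 1.0)
    levels = [1.0 - i / steps for i in range(steps + 1)]  # 1.0 down to 0.0
    for t, t_next in zip(levels, levels[1:]):
        a, a_next = alpha_bar(t), alpha_bar(t_next)
        x0_hat = denoise(x, a)                                      # guess the clean value
        eps_hat = (x - math.sqrt(a) * x0_hat) / math.sqrt(1.0 - a)  # implied noise
        # Deterministic DDIM-style step: re-noise the guess to the next, lower level.
        x = math.sqrt(a_next) * x0_hat + math.sqrt(1.0 - a_next) * eps_hat
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    xs = [sample(rng) for _ in range(4000)]
    print(f"mean {statistics.mean(xs):.3f}, spread {statistics.stdev(xs):.3f}")
```

Each pass guesses the clean value, then re-noises that guess to a slightly lower noise level, so the samples drift from pure noise (centred on 0, spread 1) towards the toy data (centred on 3, spread 0.5). The spread comes out a little under 0.5, because 50 coarse steps lose a bit of variance; more steps shrink that gap.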

The first thing to say about these models, as with related generative models in other domains such as slavering hypemonster ChatGPT, is that they are absolutely fucking incredible. Gobsmacking. Straight-up demonstrations of Clarke’s third law.

For example, this is MidJourney V4’s interpretation of the following prompt:1,2

beware the rise of fascism amongst the clangers

The degree of incredibly specific knowledge and detail and structure apparent in these generated images, given the terse and obscure text that prompted them, is almost beyond belief. There’s a lot one might quibble with (and I’ll get to the quibbling soon) but being able to go from those 8 words to these pictures is unequivocally a remarkable achievement.

I’ve harped on many times before about our metastasising theory of mind, the human tendency to impute understanding and knowledge and agency to ML systems — and to everything else — and it’s really hard to avoid doing that when faced with something like MidJourney. Holy shit, it knows what a Clanger is! It knows what fascism is! It can design uniforms for fascist Clangers!

Of course, it knows nothing of the kind.

But what it does know, or at least encode, is in some ways even more remarkable: it makes a surprisingly decent attempt to map the space of human images, or anyway some culturally localised portion of that space, complete with an index of conceptual and linguistic landmarks by which to navigate it. These landmarks can be extremely precise, and also bonkers.

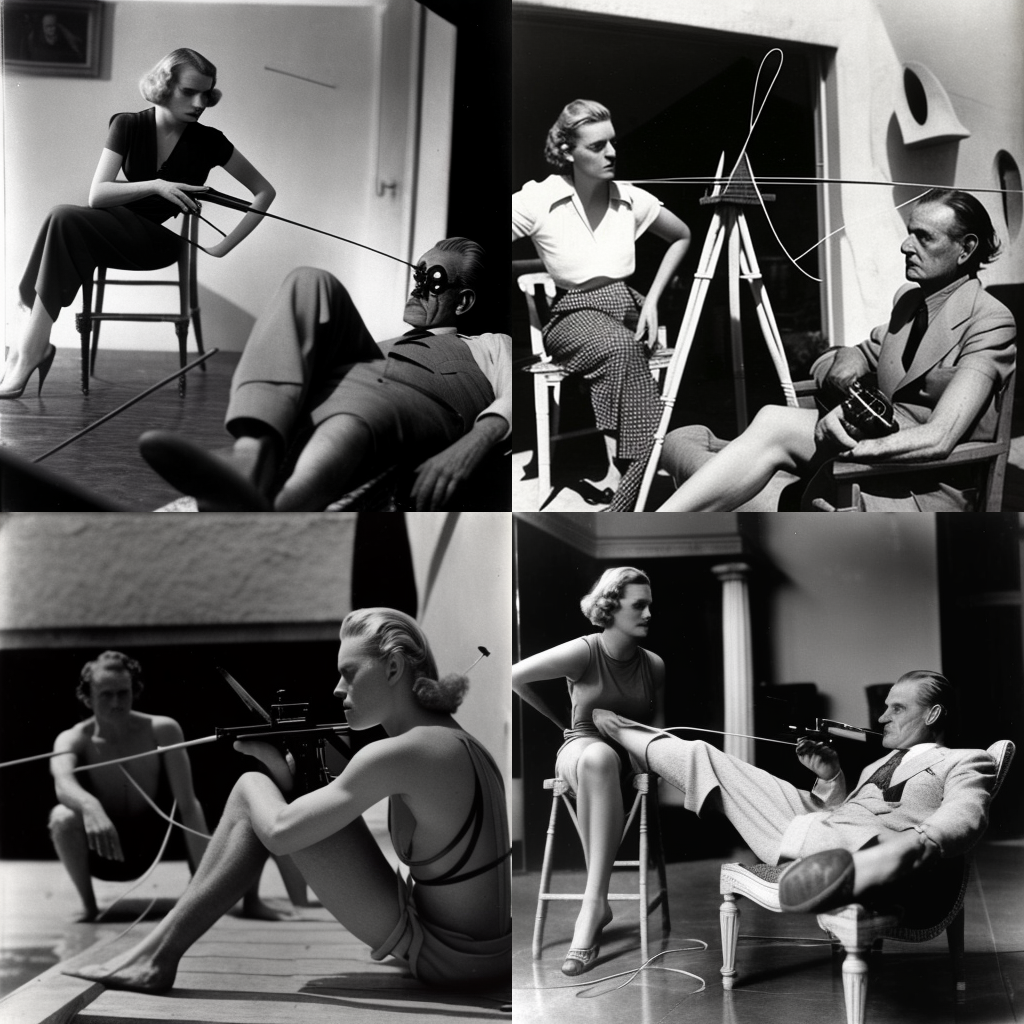

Lee Miller accidentally shooting Man Ray in the foot with a speargun

I find this set of images in particular utterly dazzling, and I’d urge you to take a moment to appreciate the wonder of them. Obviously — obviously — they don’t actually show the requested thing at all. Every individual detail is wrong and the different parts of each picture mostly don’t add up. You can see at least some of the sources and some of the joins. And yet they capture the spirit of the prompt perfectly. There’s an alignment of preoccupations, perhaps, a shared corner of the manifold.3

a disquieting sense of being surreptitiously observed by sinister ducks, as painted by rene magritte

MidJourney has an excellent grasp of some visual and artistic styles. It also has a fine eye for cliché and a hackneyed sense of composition. We might speculate that stock photos and hackwork commercial art feature heavily in its training set — there are just so many humdrum images out there, how could the model fail to pick up a few banal habits?

at the tombstone saloon bar wyatt earp decides it’s time to open another bottle of coca cola

Because images are, a lot of the time, structurally related to the physical world, or at least informed by our experience of it, there’s a sort of loose correlation between having a map of the space of images and having a map of reality, but it’s extremely fucking loose. And the waypoints for the two are often not in sync.

Images are all surface, and MidJourney is nothing if not superficial. It struggles with physical structure, with perspective, with physiology and anatomy. As with drag, the hands and feet give it away. It struggles with text. Its domain is the pictorial, not the material or orthographic — but it also has no explicit grasp of pictorial structure. A colleague tried to browbeat it into particular layouts, specifying things in the background or foreground, things to one side or in corners of the frame, almost entirely in vain. The model doesn’t put things in the middle because you tell it to, but because that’s how things tend to be done.

Realistic high angle photographic still from a CCTV of a very cramped riotous office party in a small room. Boris Johnson, wearing a tinsel scarf, is visible in the background. He is raising his glass and shouting

The ethics of all this are, of course, an absolute goatfuck of biblical proportions. The training data is appropriated wholesale with nary a thought of consent. Using the model tramples all over the employment prospects of talented creators. The model thinks nothing of fabricating evidence of wrongdoing. The image above4 wouldn’t pass the most basic forensic examination, but nobody would bat an eyelid if it were printed on the front page of tomorrow’s Metro. And sure, that’s partly because everyone seeing it would recognise the truth of what it portrays, even if that portrayal is literally false. But isn’t that the essence of propaganda?

Anyway, MidJourney is fun. You should give it a whirl. Something to play with as the world burns down around us.