TL;DR: don’t ignore allocation errors from TensorFlow.

I’ve recently been wrestling with some of the hot new things in the trendy world of machine learning: in particular TensorFlow, a system for building graphs of operations on multidimensional chunks of data, and Keras, a programming interface for TensorFlow that makes the building process a bit less irksome for many common neural network structures.

Both systems are very much in active development and, as moving targets, can be tricky to hit. Building and installing TensorFlow in particular is a pain in the arse for all but the most basic purposes. (Neural networks require a lot of number crunching, even by the generally greedy standards of machine learning, so you probably need to tailor the build to your hardware. Running lots of parallel computations on high-end graphics cards is de rigueur, and there’s a limit to the usefulness of the off-the-peg versions.)

Nevertheless, everything went pretty smoothly for the first couple of weeks. I’ve been porting models previously implemented in Matlab, a popular programming environment for science and engineering. There’s a lot to be said for Matlab, but dear god does it lend itself to shitty code. As a rule, scientific coding tends to be pretty shoddy from the get-go, and opportunities to make it shoddier are embraced with gay abandon. That’s not the main reason for moving away from Matlab, though. The main reason is that actually deploying Matlab code in real-world applications becomes eye-wateringly expensive. TensorFlow, by contrast, is “free as in beer”; its only price tag is denominated in torn-out hair and gnashed teeth. There may or may not also be performance gains to be had from TF; the jury is currently out.

The general shape of machine learning is this: you construct a model, which is a framework for capturing the features of a problem you want to solve. Then you throw fuckloads of data at it to fit its unfeasibly large number of parameters and provide the solution. That’s the “learning” part, and it can take ages.
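To make the “learning” part concrete, here is a toy sketch. It has nothing to do with TensorFlow or the models described in this post: it fits a single-feature logistic regression by plain gradient descent in pure Python. The dataset, learning rate and step count are made-up illustrative values. Before training, every prediction is exactly 0.5, so accuracy sits at coin-toss level; fitting the two parameters pushes it up.

```python
import math

# Hypothetical toy data: one feature, label is 1 exactly when x > 0.
DATA = [(-2.0, 0), (-1.5, 0), (-1.0, 0), (-0.5, 0),
        (0.5, 1), (1.0, 1), (1.5, 1), (2.0, 1)]


def predict(w, b, x):
    """Logistic model: estimated probability that the label is 1."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def accuracy(w, b):
    """Fraction of points classified correctly with a 0.5 threshold."""
    correct = sum((predict(w, b, x) >= 0.5) == bool(y) for x, y in DATA)
    return correct / len(DATA)


def train(steps=100, lr=0.5):
    """Fit w and b by gradient descent on the mean cross-entropy loss."""
    w, b = 0.0, 0.0  # untrained: every prediction is exactly 0.5
    for _ in range(steps):
        gw = sum((predict(w, b, x) - y) * x for x, y in DATA) / len(DATA)
        gb = sum(predict(w, b, x) - y for x, y in DATA) / len(DATA)
        w -= lr * gw
        b -= lr * gb
    return w, b


if __name__ == "__main__":
    print("before:", accuracy(0.0, 0.0))
    w, b = train()
    print("after:", accuracy(w, b))
```

Real networks have millions of parameters rather than two, which is why the fitting step takes ages and eats so much memory.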

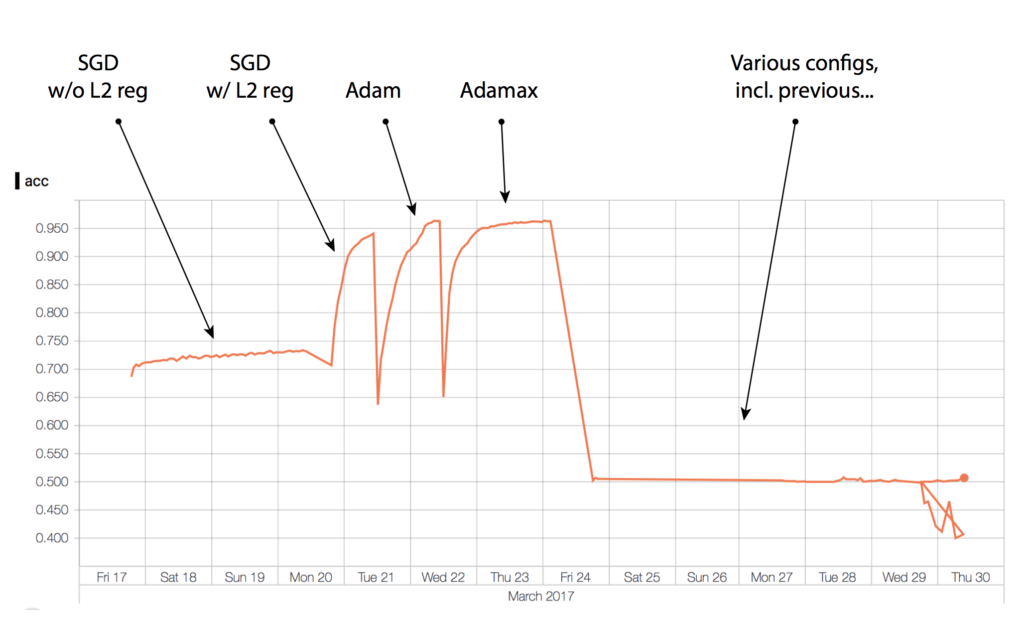

The graph above shows multiple learning attempts from different stages of the model development. The details aren’t important; what matters is that high scores are good, while values down at 0.5 mean you might as well be tossing a coin. The first few attempts worked quite nicely, with training producing the expected increases in accuracy. Then, at some point about a week and a half ago, the whole thing just hit a wall. Training stopped working completely. Nothing was learned. And (this is where the weeping and rending of garments begins) it stayed that way even when the entire codebase was reverted to its state from when it had all been working.

Many hours of fruitless unpicking, debugging, hacking and changing ensued, the kind of tedious, frustrating and unproductive developmental dysfunction that makes the whole business of computing seem like a horrible mistake that should immediately be cast aside in favour of making an honest living as a yak herder.

To cut a long and thoroughly unedifying story short, the problem ultimately seems to come down to messages like this:

```
Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.30GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.
```

What this is saying is that the system ran out of memory on the graphics card. In theory it should at that point place parts of the operation graph in the computer’s main memory instead and mediate between the two. But it doesn’t. When it says this is not a failure, it is lying. I’m not sure why it would lie about such a thing, but there it is, barefaced and shameless.

> "The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available."
>
> Delip Rao e/σ (@deliprao), August 4, 2016

Subsequently, whether through some failed synchronisation between memory compartments or for some other reason, the slightly-too-big model never bloody learns. (There is probably a moral here for us all.)
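In the spirit of the TL;DR, one low-tech safeguard is to treat that warning as fatal instead of letting training grind on uselessly. The sketch below assumes you have captured TensorFlow’s log output as text (for example by redirecting stderr to a file). The helper names `find_oom_warnings` and `check_training_log` are hypothetical, and the regex is written against the single message quoted above, so other TensorFlow versions may word it differently.

```python
import re

# Matches the BFC allocator warning quoted in this post. The exact wording is
# an assumption and may differ between TensorFlow versions.
OOM_PATTERN = re.compile(
    r"Allocator \((?P<allocator>[^)]+)\) ran out of memory trying to allocate "
    r"(?P<size>[\d.]+)(?P<unit>[KMG]iB|B)"
)

UNIT_BYTES = {"B": 1, "KiB": 2**10, "MiB": 2**20, "GiB": 2**30}


def find_oom_warnings(log_text):
    """Return (allocator name, bytes requested) for each out-of-memory warning."""
    hits = []
    for m in OOM_PATTERN.finditer(log_text):
        size = float(m.group("size")) * UNIT_BYTES[m.group("unit")]
        hits.append((m.group("allocator"), int(size)))
    return hits


def check_training_log(log_text):
    """Raise instead of carrying on if the allocator ran out of memory."""
    hits = find_oom_warnings(log_text)
    if hits:
        allocator, nbytes = hits[0]
        raise RuntimeError(
            f"{allocator} ran out of memory ({nbytes / 2**30:.2f} GiB requested); "
            "try a smaller batch size"
        )
```

Calling `check_training_log` on the log after the first epoch or two would have turned a week of head-scratching into an immediate, explicit error.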

The solution is correspondingly straightforward: reduce memory requirements by processing the data in smaller batches. A slightly anti-climactic conclusion to a week of thorough vexation, but at least it’s all working again. Hey ho.
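Why smaller batches help: to a rough first approximation, the memory a training step needs is a fixed chunk for the model’s weights plus an amount proportional to the batch size for the intermediate activations. The sketch below assumes that crude linear model (real allocators also need workspace memory and headroom for fragmentation) and simply halves the batch size until the estimate fits. The function `largest_fitting_batch` and all the numbers in the example are hypothetical.

```python
def largest_fitting_batch(start_batch, bytes_per_sample, fixed_bytes, budget_bytes):
    """Halve the batch size until the rough memory estimate fits the budget.

    Assumes memory ~= fixed_bytes + batch_size * bytes_per_sample.
    """
    batch = start_batch
    while batch > 1 and fixed_bytes + batch * bytes_per_sample > budget_bytes:
        batch //= 2
    if fixed_bytes + batch * bytes_per_sample > budget_bytes:
        raise ValueError("model does not fit even with a batch size of 1")
    return batch


MiB = 2**20
GiB = 2**30

# Hypothetical figures: 1 GiB of weights, 36 MiB of activations per sample,
# and a 4 GiB card.
print(largest_fitting_batch(256, 36 * MiB, 1 * GiB, 4 * GiB))  # prints 64
```

Here a starting batch of 256 is halved twice, to 64, before the estimate drops under the budget. In Keras, the resulting number is what you would pass as the `batch_size` argument to `model.fit`.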